Custom Shadow Mapping in Unity

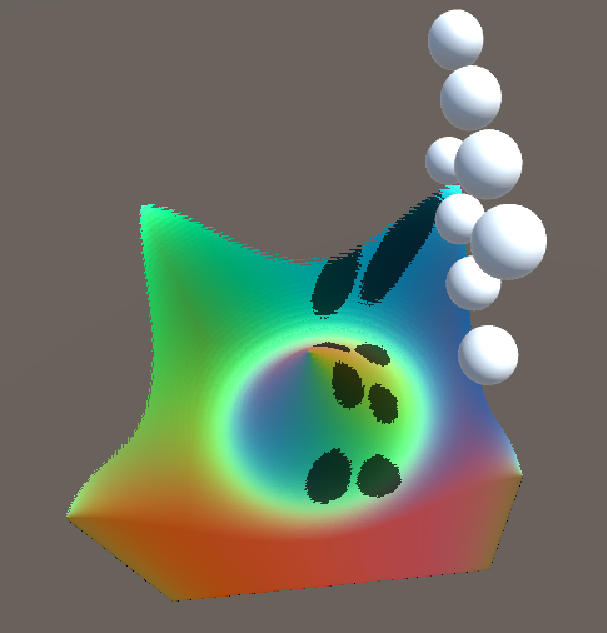

I am going to walk through how to sample your own shadows from the standard shadow mapping pipeline in Unity. My application is casting shadows from rasterized objects onto ray marched surfaces. However, creating a camera for a light and sampling its depth map has a wide variety of uses in real time VFX, such as: stylized cast shadows; volumetric light shafts and shadows; subsurface scattering; ambient occlusion baking directly in Unity; automatically marking areas where effects such as snow and rain shouldn’t be applied because the area is under a roof or cover; and much more.

As usual, you can find the code here on GitHub: https://github.com/IRCSS/UnityRaymarching

If you just need to sample the already constructed screen space shadow texture, you don’t need to do much. Just look at the few lines of code I posted in a tweet, and you are good to go. This article is also not about how to do the entire shadow mapping in Unity (creating the different shadow cascade maps). What it is about is how to copy over the depth texture from the light, set up the correct shadow cascade coordinates and sample the depth to test for shadowing in a fragment shader.

A warning ahead: this article is going to be longer than my usual posts. At least for this topic I want to try out a more in depth explanation, which may be more useful for people who are just getting into this field. I will still separate the segments with titles that are as clear as I can make them, so if I am moving too slowly for a more experienced reader, you can just skip a section.

What Is Shadow Mapping and Why Should I Care

Shadow mapping is very simple on paper. The technique is based on the idea that if an area is exposed to a light source, it can see the light source. In turn, the light source can also see the area. Whatever the light source cannot see is occluded by an object which the light source can see, and hence that area is in the shadow cast by that object. So what we need is a camera which records what the light source can see, and then we use this to determine whether a pixel is seen by the light or is in the shadow of another object. The implementation of this technique can get more challenging if you want to cover all the edge cases and problems with shadow mapping. Before going into the technical implementation though, I would like to talk a bit about why shadow mapping is important.

In ray tracing or ray marching, getting cast shadows is relatively easy. You just send a ray in the direction of the light and see if it is being blocked or not. In a rasterized workflow though, you have the issue that by design, a fragment or pixel program doesn’t know anything about the scene itself. It doesn’t know where the other objects are, and what relationship it has with them. It doesn’t even know its relationship with other vertices or fragments of the same object (with the exception of the fragments in its immediate surroundings, for the sake of filtering techniques). This makes a phenomenon like shadow casting a challenging problem, because suddenly you need objects to act on each other and affect each other’s shading (global illumination in general faces the same challenges). In general the graphics pipeline is great at fast calculation of local effects, which are homogeneous across the batch and are calculated with the same algorithm, but sucks at global effects.

People have come up with several techniques to get around this. A very recent one is mixing ray tracing and rasterization to get shadows, reflections and global illumination in real time, with hardware support through DXR. Different games have also found tricks around the shadow problem. Another worthy mention is mixing baked global illumination with clever placement of volumes and projections. An example of this was done in the game The Last of Us. There are tons of other tricks that only work for a specific set of criteria. One example of that is the shadow decal implementation in the game Inside. Traditionally the scene was dominated by two popular techniques, Shadow Volumes and Shadow Mapping.

First, a bit about Shadow Volumes. Why? Because understanding the technique’s limitations makes it clear why Shadow Mapping is so widely used. Shadow Volumes is based on the idea of extruding the shape of the occluders in the direction of the light, creating a volume of shadow. This volume is typically marked in screen space in the stencil buffer (for more on this, look at this GPU Gems chapter from Morgan McGuire). Since this is done in screen space, you get the main advantage of Shadow Volumes: pixel perfect shadows that don’t require antialiasing and are at the maximum required resolution. The main disadvantage of the technique was that it caused fill rate issues for the depth/stencil buffer (same physical memory in the GPU). Soft shadows were traditionally not included either (I have seen some papers on that, but haven’t read any of them), and shadows always had to take on the shape of the casting volumes (with some issues with transparency too). However, even with these disadvantages, the Shadow Volumes technique has been used in games, the most notable example being Doom 3. Here is a lovely email from John Carmack on the Shadow Volume implementation in the Doom 3 documentation.

Shadow Mapping has the advantage that the shadows can be accumulated in a screen space texture, which can be blurred to imitate soft shadows. It is also simple to implement and easily allows things such as turning off shadow casting or receiving for some of the objects in the scene, applying image effects on the texture for stylized shadows, or casting shadows whose shape differs from the actual shape of the mesh casting them (leaves, trees, bushes and such). There are many other points of comparison between the two, however I have never implemented a Shadow Volume so I can’t talk about their performance, ease of coding, etc.

The main disadvantage of Shadow Mapping, at the very least for me, has always been resolution (if we ignore the performance cost of the extra depth pass for the main camera and each light, which requires a lot of draw calls). You never have enough resolution. Techniques have been created to deal with this. In a perspective projection, objects closer to the camera need higher resolution than those further away. Based on this fact, people have come up with ideas such as shadow cascades. This is explained very well in Unity’s own documentation. This is still not enough to create the pixel perfect shadows of Shadow Volumes, but it is better than nothing. Another issue is aliasing. Again filtering comes to the rescue, and with techniques such as Percentage Closer Filtering this problem has been somewhat mitigated.

Now having explored a bit how Shadow Mapping fits in the grand scheme of things, let’s have a look at the general steps which are taken in shadow mapping.

Shadow Mapping Implementation, a Top Down Look

The goal is to know, for each fragment currently being rendered, whether it is occluded by another object in the scene in relation to the light source. For simplicity, I am going to assume there is only one directional light source in the scene. Step by step:

1. A visible set needs to be determined for the directional light’s camera.

2. The depth of each fragment of the visible set is rendered to a buffer by the light camera. The depth is of course in relation to the light camera. This is also where depth maps of different resolutions are rendered for the different shadow cascades.

3. The main camera renders the depth of each fragment it sees, in relation to itself.

4. In the shadow collecting phase, the world position of each fragment is first reconstructed using the depth map of the main camera.

5. Then, using the VP matrix of the light camera (projection*view), the fragment is transformed from world space coordinates to the homogeneous camera coordinates of the light camera. In this coordinate system x and y correspond to the horizontal and vertical position of the fragment in the light camera texture and z is the depth of the fragment as seen from the light camera. We have now expressed the fragment’s coordinates in a system where we can compare its depth with an occluder’s depth and determine if the occluder is occluding the fragment.

6. At this stage, it is time to unpack the depth value we rendered in step 2. If there are no shadow cascades, we can simply remap the homogeneous coordinates we calculated from the -1 to 1 range to the 0 to 1 range and sample the light camera’s depth texture. With shadow cascades this is a bit different, since we first need to choose the correct shadow cascade texture to sample and calculate the UV coordinate for that specific texture.

7. Once that is done, we sample the light camera’s depth texture. Then we compare the z value we calculated in step 5 with the depth value we just retrieved. If the depth value from the depth texture is smaller than our z value, the fragment is occluded and is in shadow. The logic here is that a smaller depth value means there exists a fragment, along the path from the fragment we are rendering to the light, which is closer to the light than the fragment we are rendering, and hence our fragment is occluded by whatever fragment the light is seeing. (Of course you need to keep an eye on the reversed Z buffer and such.)
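The last three steps can be sketched on the CPU. The following Python snippet is only an illustration under simplifying assumptions and is not Unity code: the light matrix, the tiny depth map, the `bias` parameter and all function names are made up for this example, there are no cascades, and it uses a conventional (not reversed) depth range where a smaller value means closer to the light.

```python
def to_light_clip(light_vp, world_pos):
    """Multiply a world position (x, y, z) by a 4x4 row-major light VP matrix."""
    x, y, z = world_pos
    v = (x, y, z, 1.0)
    cx, cy, cz, cw = (sum(row[k] * v[k] for k in range(4)) for row in light_vp)
    # Perspective divide; w is 1 for an orthographic (directional) light.
    return cx / cw, cy / cw, cz / cw


def in_shadow(light_vp, depth_map, world_pos, bias=0.001):
    """Return True if world_pos is hidden from the light by a closer surface."""
    cx, cy, cz = to_light_clip(light_vp, world_pos)
    # Remap from [-1, 1] to [0, 1] to get texture coordinates and depth.
    u, v, depth = (cx + 1) / 2, (cy + 1) / 2, (cz + 1) / 2
    height, width = len(depth_map), len(depth_map[0])
    # Nearest-texel lookup, clamped to the map borders.
    col = min(max(int(u * width), 0), width - 1)
    row = min(max(int(v * height), 0), height - 1)
    stored = depth_map[row][col]
    # A smaller stored depth means something closer to the light was recorded.
    return stored < depth - bias


# Hypothetical light looking along +z, covering x, y in [-5, 5] and z in [0, 10].
LIGHT_VP = [
    [0.2, 0.0, 0.0, 0.0],
    [0.0, 0.2, 0.0, 0.0],
    [0.0, 0.0, 0.2, -1.0],
    [0.0, 0.0, 0.0, 1.0],
]
# 2x2 depth map in [0, 1]; 1.0 means the light saw nothing in that texel.
DEPTH_MAP = [
    [1.0, 1.0],
    [1.0, 0.3],  # an occluder at 30 percent of the light's depth range
]

print(in_shadow(LIGHT_VP, DEPTH_MAP, (2.5, 2.5, 8.0)))    # behind the occluder
print(in_shadow(LIGHT_VP, DEPTH_MAP, (-2.5, -2.5, 4.0)))  # nothing in the way
```

The small `bias` is there because a surface compared against its own stored depth would otherwise flicker between lit and shadowed due to limited precision.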

These were the general steps. Now for the implementation in Unity.

Implementation in Unity

I am not going to cover steps one and two in great detail. They require a few blog posts of their own. I refer interested readers to other sources; especially for step two, giving this entry by Catlike Coding a read wouldn’t be bad.

One thing worth mentioning is that there are different ways to do both steps. How one engine packs its shadow cascades is different from how another might. Also, step one is by no means trivial. Knowing which objects cast a shadow into your main camera’s frustum is not as simple as just rendering whatever your main camera sees. What your main camera doesn’t see might also cast a shadow onto the visible area. I don’t know how Unity calculates the visible set for the light camera, but generally this is done through a conservative estimation by projecting a simple bounding volume onto the main camera’s frustum (it is also popular to do the projections on several 2D planes and do the checks there to reduce complexity).

On console and PC, Unity collects the shadows in screen space in a texture before the main pass. This has the advantage that the shadows can be blurred to different degrees beforehand, and in the main pass you just sample what you need. For this reason the original depth map of the light source is no longer available by the time you get to rendering your fragment in the main pass. So the first thing we need to do is to copy this texture somewhere for later use in the pipeline.

You can do this via a command buffer. The light camera in Unity, like all other cameras in the engine, has the ability to receive command buffers which it can execute at certain points in its own rendering events. You need code like this on your light source GameObject:

// Needs: using UnityEngine; using UnityEngine.Rendering;

// cb and m_ShadowmapCopy are assumed to be fields of this script.
cb = new CommandBuffer();

RenderTargetIdentifier shadowmap = BuiltinRenderTextureType.CurrentActive;

m_ShadowmapCopy = new RenderTexture(1024, 1024, 16, RenderTextureFormat.ARGB32);

m_ShadowmapCopy.filterMode = FilterMode.Point;

// Read raw depth values instead of the filtered shadow comparison result.
cb.SetShadowSamplingMode(shadowmap, ShadowSamplingMode.RawDepth);

var id = new RenderTargetIdentifier(m_ShadowmapCopy);

cb.Blit(shadowmap, id);

cb.SetGlobalTexture("m_ShadowmapCopy", id);

Light m_Light = this.GetComponent<Light>();

m_Light.AddCommandBuffer(LightEvent.AfterShadowMap, cb);

So you create a command buffer that copies the active render target (at that point the light camera’s shadow map) into a separate texture and makes it accessible as a global texture for all subsequent shaders in the pipeline. Just make sure you set the sampling mode to RawDepth.

On some platforms, you can simply write to the depth buffer and sample from it with a different sampler like Shadow2D. On others you can’t, and you need to resolve your depth into an accessible texture for later use. I am not sure how Unity handles the different platforms and what effect this has on the code in this blog post, so always follow the documentation of the engine and try to keep as close as possible to their way of doing things.

Now that you have the depth map, it is time to sample from it in a fragment shader. First, in your main pass, as you are rendering your fragments, you need to reconstruct their world position in the fragment shader. There are different ways to do this, for example the one presented by Making Stuff Look Good in Unity.

In my case I don’t need to, since I am raymarching. The world position of my fragment is returned by my surface function. The next step is to convert this from world position coordinates to the homogeneous camera space of the light camera. To do that we need the VP matrix of the light camera. If you set up your light camera yourself, this would be something like:

YourCamera.projectionMatrix * YourCamera.worldToCameraMatrix;

But if you want to use the standard Unity light camera texture, you don’t need to construct this yourself. Unity has saved this matrix in an array of matrices called unity_WorldToShadow. Each entry of the array is the transformation matrix necessary to convert your world position to the UV of one of the 4 cascades Unity supports. The question now is: which cascade do you need?
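As a brief aside on the projection * view product for a self-made light camera, here is a small CPU-side sketch in Python. It is not Unity code and does not follow Unity’s matrix conventions: the helper names, the unrotated camera and the simple orthographic projection are all assumptions chosen to keep the arithmetic easy to follow. It mainly shows that the order of the product matters.

```python
def mat_mul(a, b):
    """Product of two 4x4 row-major matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]


def transform_point(m, p):
    """Apply a 4x4 matrix to the point p = (x, y, z), using w = 1."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[r][k] * v[k] for k in range(4)) for r in range(4))


def view_from_position(cx, cy, cz):
    """World-to-camera matrix of an unrotated camera at (cx, cy, cz)."""
    return [[1.0, 0.0, 0.0, -cx],
            [0.0, 1.0, 0.0, -cy],
            [0.0, 0.0, 1.0, -cz],
            [0.0, 0.0, 0.0, 1.0]]


def ortho(half_size, near, far):
    """Map x, y in [-half_size, half_size] and z in [near, far] to [-1, 1]."""
    return [[1.0 / half_size, 0.0, 0.0, 0.0],
            [0.0, 1.0 / half_size, 0.0, 0.0],
            [0.0, 0.0, 2.0 / (far - near), -(far + near) / (far - near)],
            [0.0, 0.0, 0.0, 1.0]]


view = view_from_position(0.0, 0.0, -10.0)   # hypothetical light at z = -10
proj = ortho(5.0, 0.0, 20.0)
light_vp = mat_mul(proj, view)               # projection * view

point = (2.5, -5.0, 10.0)
print(transform_point(light_vp, point))             # inside the [-1, 1] cube
print(transform_point(mat_mul(view, proj), point))  # wrong order, z ends up at 10
```

With the correct order the point is first moved into the light’s space and then squeezed into the -1 to 1 cube; with the swapped order the translation is applied after the projection and the result is no longer a usable clip space position.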

Before we get to that, a tip for when you are digging around Unity’s graphics pipeline. You might find a parameter or a macro online which supposedly holds what you need, but when you try using it, you get a not defined error or a black render target. In cases like this, first find out which CG include file defines the macro you want. Include that CG file (for example AutoLight or Lighting). Then, if you look at the CG file, you will see that macros are defined differently under different conditions (graphics API, platform, quality settings etc.) and parameters are not always populated correctly. You need to let Unity know which type of pass you are going to code, so that it can set up its macros properly for you. You do this by adding a tag to your pass. For example, you set the LightMode tag to ForwardBase.

Now back to cascades. If you go to your Quality Settings, you can see how the shadow cascades are set up. For this code, I am assuming there are 4 cascades set up; if you are using 2, you need to adjust the code. There you see a shadow draw distance, and a series of numbers for each cascade. Let’s assume your shadow draw distance is 40, and your first cascade takes the first 20 percent of your camera frustum. If your fragment is less than 8 meters from your camera, you need to sample from cascade one. If your second cascade is responsible for 20 to 40 percent of the frustum, then as long as your fragment has a world distance of 8 to 16, you need to sample cascade two, and so on. Unity conveniently puts the beginning and end of each cascade region in two float4 parameters called _LightSplitsNear and _LightSplitsFar. Now you could just branch here and have a case for each cascade, but the way Unity does it is a clever trick to avoid that. More on that in this blog entry from Aras. First, here is the code:

float4 near = float4 (vDepth >= _LightSplitsNear);

float4 far = float4 (vDepth < _LightSplitsFar);

float4 weights = near * far;

vDepth in my case is the distance from the camera in world space. _LightSplitsNear contains the near planes (starts) of each cascade region. The four floats correspond to the beginning values of the four cascades in world space units. _LightSplitsFar is the same but for the far planes. So _LightSplitsNear.x and _LightSplitsFar.x give you the first cascade region from beginning to end in world space units.
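To make the numbers concrete, the arithmetic of the example (a draw distance of 40 with cascades ending at 20, 40, 60 and 80 percent) can be reproduced in a few lines of Python. This is only a sketch of the arithmetic: `cascade_splits` is a hypothetical helper, and Unity fills _LightSplitsNear and _LightSplitsFar itself, possibly with different values than this simplified calculation.

```python
def cascade_splits(draw_distance, end_percents):
    """Return (near, far) lists of split distances in world units.

    end_percents holds the end of each cascade as a percentage of the
    shadow draw distance; each cascade starts where the previous one ends.
    """
    far = [draw_distance * p / 100 for p in end_percents]
    near = [0.0] + far[:-1]
    return near, far


near, far = cascade_splits(40, [20, 40, 60, 80])
print(near)  # [0.0, 8.0, 16.0, 24.0]
print(far)   # [8.0, 16.0, 24.0, 32.0]
```

Each pair `near[i]`, `far[i]` plays the role of one component of the two float4 parameters.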

What float4 near does is check whether the fragment’s distance to the camera is greater than or equal to the near plane of each cascade’s frustum. Going back to our example of a 40 meter draw distance, and let’s say shadow cascade regions ending at 20, 40, 60 and 80 percent of it (that is, at 8, 16, 24 and 32 meters), the near calculation for a fragment that is 15 units away would give you a float4 of (1,1,0,0).

Far does the opposite for the far distance. It checks whether the fragment is closer than the far plane of each cascade. So for the same fragment you would get a far value of (0,1,1,1).

The weights calculation now acts like a logical AND. You will only have a 1 in an entry where the fragment is both closer than the far plane and further away than the near plane (so it is in that cascade’s frustum). In the case of the fragment that is 15 units away, your weights would be (0,1,0,0), so cascade number two is our pick.
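The worked example can be checked with a short Python sketch that mirrors the three shader lines on the CPU. The function name and the split values (taken from the example numbers, not read from Unity) are assumptions for illustration.

```python
def cascade_weights(v_depth, splits_near, splits_far):
    """Mirror `near`, `far` and `weights` from the shader snippet as lists."""
    near = [1.0 if v_depth >= n else 0.0 for n in splits_near]
    far = [1.0 if v_depth < f else 0.0 for f in splits_far]
    weights = [n * f for n, f in zip(near, far)]
    return near, far, weights


# Split distances from the example: cascades cover 0-8, 8-16, 16-24 and 24-32.
SPLITS_NEAR = [0.0, 8.0, 16.0, 24.0]
SPLITS_FAR = [8.0, 16.0, 24.0, 32.0]

near, far, weights = cascade_weights(15.0, SPLITS_NEAR, SPLITS_FAR)
print(near)     # [1.0, 1.0, 0.0, 0.0]
print(far)      # [0.0, 1.0, 1.0, 1.0]
print(weights)  # [0.0, 1.0, 0.0, 0.0]
```

One thing this makes visible: a fragment beyond the last far plane gets all-zero weights, so no cascade is selected for it.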

To get the final coordinate, you can simply use the weights to blend between the different cascade UV coordinates you calculate with unity_WorldToShadow.

float3 shadowCoord0 = mul(unity_WorldToShadow[0], float4(outS.p.xyz, 1.)).xyz;

float3 shadowCoord1 = mul(unity_WorldToShadow[1], float4(outS.p.xyz, 1.)).xyz;

float3 shadowCoord2 = mul(unity_WorldToShadow[2], float4(outS.p.xyz, 1.)).xyz;

float3 shadowCoord3 = mul(unity_WorldToShadow[3], float4(outS.p.xyz, 1.)).xyz;

float3 coord =

shadowCoord0 * weights.x + // case: cascade one

shadowCoord1 * weights.y + // case: cascade two

shadowCoord2 * weights.z + // case: cascade three

shadowCoord3 * weights.w; // case: cascade four
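Because at most one weight is 1 and the rest are 0, the weighted sum acts as a branch-free selection. A small Python sketch with made-up coordinates (the values and the function name are purely illustrative) shows this:

```python
def blend_shadow_coords(coords, weights):
    """Weighted sum of four (x, y, z) shadow coordinates, as in the shader."""
    return tuple(
        sum(c[axis] * w for c, w in zip(coords, weights))
        for axis in range(3)
    )


# Hypothetical per-cascade coordinates of one fragment.
COORDS = [
    (0.1, 0.1, 0.5),
    (0.6, 0.2, 0.4),
    (0.3, 0.7, 0.3),
    (0.8, 0.8, 0.2),
]

print(blend_shadow_coords(COORDS, [0.0, 1.0, 0.0, 0.0]))  # picks cascade two
print(blend_shadow_coords(COORDS, [0.0, 0.0, 0.0, 0.0]))  # no cascade selected
```

The second call shows a consequence of the math worth keeping in mind: when all weights are zero, for instance for a fragment beyond the last cascade, the result collapses to (0, 0, 0) and would sample the corner of the texture unless that case is handled separately.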

Now you have the coordinates to sample the light camera’s depth texture we bound globally before.

float shadowmask = tex2D(m_ShadowmapCopy, coord.xy).g;

We are almost done, we have all the information we need. We know how close the nearest surface to the light camera is along the light ray, and where our fragment is on that line. We can simply compare them and use the result as we wish.

shadowmask = shadowmask < coord.z;

col *= max(0.3, shadowmask);

Note that here a stored value smaller than coord.z marks the fragment as lit, which is the opposite direction from the general description earlier. As far as I can tell this is the reversed Z buffer at work: on platforms where Unity uses it, a larger depth value means closer to the light.

There is still a lot you can do. If you wish to do something about the aliasing, look at the macros in the Unity Shadow Library CG include file (around line 50); there are already macros for that. Make sure to use them to keep cross platform support, or potentially even hardware acceleration for some things. Also, like Unity, you can collect your shadows in screen space in a separate pass and blur them for some fun faked global effects.
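To give an idea of what filtering against aliasing can look like, here is a minimal CPU-side sketch of Percentage Closer Filtering in Python. It is not what Unity’s macros do internally; the 3x3 box kernel, the function names and the tiny depth map are assumptions for illustration. The key point is that the comparison results are averaged, not the depth values. The `reversed_z` flag switches the comparison direction, and `shade` mirrors the `max(0.3, shadowmask)` line.

```python
def is_lit(stored_depth, fragment_depth, reversed_z=True):
    """Single depth test; mirrors `shadowmask < coord.z` when reversed_z is True."""
    if reversed_z:
        # Larger values are closer to the light.
        return stored_depth < fragment_depth
    # Conventional range: smaller values are closer to the light.
    return stored_depth >= fragment_depth


def pcf_visibility(depth_map, u, v, fragment_depth, reversed_z=True):
    """Average the depth test over a 3x3 texel neighbourhood around (u, v)."""
    height, width = len(depth_map), len(depth_map[0])
    center_col = min(max(int(u * width), 0), width - 1)
    center_row = min(max(int(v * height), 0), height - 1)
    lit = 0
    for d_row in (-1, 0, 1):
        for d_col in (-1, 0, 1):
            # Clamp neighbours to the map borders.
            row = min(max(center_row + d_row, 0), height - 1)
            col = min(max(center_col + d_col, 0), width - 1)
            if is_lit(depth_map[row][col], fragment_depth, reversed_z):
                lit += 1
    return lit / 9


def shade(color, visibility, min_light=0.3):
    """Darken a colour the way `col *= max(0.3, shadowmask)` does."""
    factor = max(min_light, visibility)
    return tuple(c * factor for c in color)


# 3x3 reversed Z depth map: the right column holds an occluder near the light.
DEPTH_MAP = [
    [0.2, 0.2, 0.9],
    [0.2, 0.2, 0.9],
    [0.2, 0.2, 0.9],
]
visibility = pcf_visibility(DEPTH_MAP, 0.5, 0.5, fragment_depth=0.5)
print(visibility)                          # two thirds of the samples are lit
print(shade((1.0, 1.0, 1.0), visibility))
```

Instead of a hard 0 or 1, the fragment near the shadow edge now gets a fractional visibility, which softens the stair-stepping along the shadow boundary.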

Hope you liked the article, you can follow me on my Twitter IRCSS.

Further Readings, References and Thanks

This time I am not repeating all the links in the article here, since there are way too many. Just the two that helped me get this set up and two GPU Gems articles that further show what you can do if you sample the light camera’s depth pass.

Good article on volumetric rays by Kostas Anagnostou: https://interplayoflight.wordpress.com/2015/07/03/adventures-in-postprocessing-with-unity/

Good post from Aras on deferred shadowing: https://aras-p.info/blog/2009/11/04/deferred-cascaded-shadow-maps/

GPU Gems article on subsurface scattering. They use the depth map from the light camera too. Using similar code path as described here, you can get the same effect: https://developer.download.nvidia.com/books/HTML/gpugems/gpugems_ch16.html

GPU Gems article on baking ambient occlusion technique. This was the original technique developed internally by Industrial Light and Magic. It features a variation of the technique that uses many light cameras with depth maps to get a ground truth: https://developer.download.nvidia.com/books/HTML/gpugems/gpugems_ch17.html